Get updated via email on new publications or videos by following us on Google Scholar or on our research blog.

2025

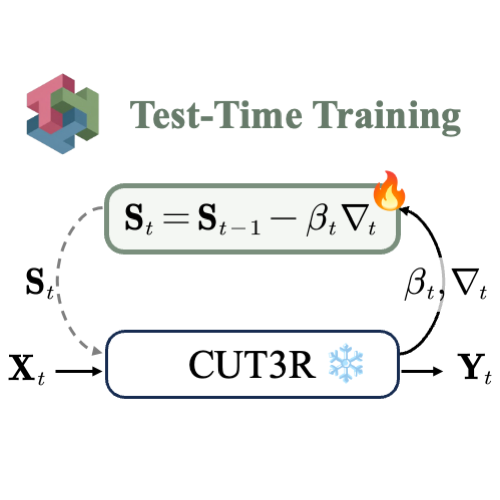

TTT3R: 3D Reconstruction as Test-Time Training

X. Chen, Y. Chen, Y. Xiu, A. Geiger, A. Chen

ARXIV, 2025

TL;DR: A simple state update rule to enhance length generalization for CUT3R.

Abstract: Modern Recurrent Neural Networks have become a competitive architecture for 3D reconstruction due to their linear-time complexity. However, their performance degrades significantly when applied beyond the training context length, revealing limited length generalization. In this work, we revisit the 3D reconstruction foundation models from a Test-Time Training perspective, framing their designs as an online learning problem. Building on this perspective, we leverage the alignment confidence between the memory state and incoming observations to derive a closed-form learning rate for memory updates, to balance between retaining historical information and adapting to new observations. This training-free intervention, termed TTT3R, substantially improves length generalization, achieving a 2× improvement in global pose estimation over baselines, all while operating at 20 FPS with just 6 GB of GPU memory to process thousands of images.

Latex Bibtex Citation:

@inproceedings{Chen2025TTT3R,

author = {Xingyu Chen and Yue Chen and Yuliang Xiu and Andreas Geiger and Anpei Chen},

title = {TTT3R: 3D Reconstruction as Test-Time Training},

booktitle = {ARXIV},

year = {2025}

}
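
The abstract describes the memory update as a balance between retaining history and adapting to new observations, controlled by a learning rate derived from alignment confidence. A minimal sketch of that idea follows. It is an illustration only, not the paper's update rule: the function names, the sigmoid-of-dot-product confidence, and the flat-list state are all assumptions made here for clarity.

```python
import math


def alignment_confidence(state, observation):
    """Hypothetical confidence in (0, 1): sigmoid of a scaled dot product.

    The abstract does not give the closed form; this choice is an assumption.
    """
    score = sum(s * o for s, o in zip(state, observation)) / math.sqrt(len(state))
    return 1.0 / (1.0 + math.exp(-score))


def gated_update(state, candidate, beta):
    """Move the state toward the candidate by a per-step learning rate beta.

    beta = 0 keeps the old state (retain history);
    beta = 1 replaces it with the candidate (adapt fully).
    """
    return [s + beta * (c - s) for s, c in zip(state, candidate)]


# Usage: derive the step size from the confidence, then apply the update.
state = [0.0, 0.0]
observation = [1.0, 1.0]
beta = alignment_confidence(state, observation)
new_state = gated_update(state, observation, beta)
```

With a fixed `beta` this reduces to an exponential moving average; making `beta` depend on how well the state and the incoming observation agree is the part the abstract attributes to TTT3R.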

Human3R: Everyone Everywhere All at Once

Y. Chen, X. Chen, Y. Xue, A. Chen, Y. Xiu, G. Pons-Moll

ARXIV, 2025

TL;DR: Inference with One model, One stage; Training in One day using One GPU.

Abstract: We present Human3R, a unified, feed-forward framework for online 4D human-scene reconstruction, in the world frame, from casually captured monocular videos. Unlike previous approaches that rely on multi-stage pipelines, iterative contact-aware refinement between humans and scenes, and heavy dependencies, e.g., human detection, depth estimation, and SLAM pre-processing, Human3R jointly recovers global multi-person SMPL-X bodies ("everyone"), dense 3D scene ("everywhere"), and camera trajectories in a single forward pass ("all-at-once"). Our method builds upon the 4D online reconstruction model CUT3R and uses parameter-efficient visual prompt tuning to strive to preserve CUT3R's rich spatiotemporal priors while enabling direct readout of multiple SMPL-X bodies. Human3R is a unified model that eliminates heavy dependencies and iterative refinement. After being trained on the relatively small-scale synthetic dataset BEDLAM for just one day on one GPU, it achieves superior performance with remarkable efficiency: it reconstructs multiple humans in a one-shot manner, along with 3D scenes, in one stage, at real-time speed (15 FPS) with a low memory footprint (8 GB). Extensive experiments demonstrate that Human3R delivers state-of-the-art or competitive performance across tasks, including global human motion estimation, local human mesh recovery, video depth estimation, and camera pose estimation, with a single unified model. We hope that Human3R will serve as a simple yet strong baseline and be easily extended for downstream applications.

Latex Bibtex Citation:

@inproceedings{Chen2025Human3R,

author = {Yue Chen and Xingyu Chen and Yuxuan Xue and Anpei Chen and Yuliang Xiu and Gerard Pons-Moll},

title = {Human3R: Everyone Everywhere All at Once},

booktitle = {ARXIV},

year = {2025}

}

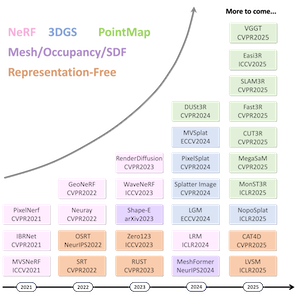

Advances in Feed-Forward 3D Reconstruction and View Synthesis

J. Zhang, Y. Li, A. Chen, M. Xu, K. Liu, J. Wang, X. Long, H. Liang, Z. Xu, H. Su, C. Theobalt, C. Rupprecht, A. Vedaldi, H. Pfister, S. Lu, F. Zhan

ARXIV, 2025

Abstract: 3D reconstruction and view synthesis are foundational problems in computer vision, graphics, and immersive technologies such as augmented reality (AR), virtual reality (VR), and digital twins. Traditional methods rely on computationally intensive iterative optimization in a complex chain, limiting their applicability in real-world scenarios. Recent advances in feed-forward approaches, driven by deep learning, have revolutionized this field by enabling fast and generalizable 3D reconstruction and view synthesis. This survey offers a comprehensive review of feed-forward techniques for 3D reconstruction and view synthesis, with a taxonomy according to the underlying representation architectures including point cloud, 3D Gaussian Splatting (3DGS), Neural Radiance Fields (NeRF), etc. We examine key tasks such as pose-free reconstruction, dynamic 3D reconstruction, and 3D-aware image and video synthesis, highlighting their applications in digital humans, SLAM, robotics, and beyond. In addition, we review commonly used datasets with detailed statistics, along with evaluation protocols for various downstream tasks. We conclude by discussing open research challenges and promising directions for future work, emphasizing the potential of feed-forward approaches to advance the state of the art in 3D vision.

Latex Bibtex Citation:

@inproceedings{Zhang2025Feedforward,

author = {Jiahui Zhang and Yuelei Li and Anpei Chen and Muyu Xu and Kunhao Liu and Jianyuan Wang and Xiao-Xiao Long and Hanxue Liang and Zexiang Xu and Hao Su and Christian Theobalt and Christian Rupprecht and Andrea Vedaldi and Hanspeter Pfister and Shijian Lu and Fangneng Zhan},

title = {Advances in Feed-Forward 3D Reconstruction and View Synthesis},

booktitle = {ARXIV},

year = {2025}

}

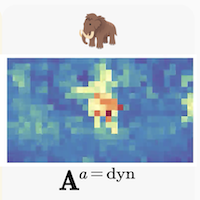

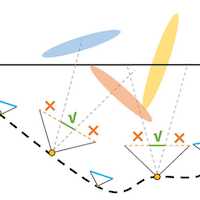

Easi3R: Estimating Disentangled Motion from DUSt3R Without Training

X. Chen, Y. Chen, Y. Xiu, A. Geiger, A. Chen

International Conference on Computer Vision (ICCV), 2025

TL;DR: A simple training-free approach adapting DUSt3R for dynamic scenes.

Abstract: Recent advances in DUSt3R have enabled robust estimation of dense point clouds and camera parameters of static scenes, leveraging Transformer network architectures and direct supervision on large-scale 3D datasets. In contrast, the limited scale and diversity of available 4D datasets present a major bottleneck for training a highly generalizable 4D model. This constraint has driven conventional 4D methods to fine-tune 3D models on scalable dynamic video data with additional geometric priors such as optical flow and depths. In this work, we take an opposite path and introduce Easi3R, a simple yet efficient training-free method for 4D reconstruction. Our approach applies attention adaptation during inference, eliminating the need for from-scratch pre-training or network fine-tuning. We find that the attention layers in DUSt3R inherently encode rich information about camera and object motion. By carefully disentangling these attention maps, we achieve accurate dynamic region segmentation, camera pose estimation, and 4D dense point map reconstruction. Extensive experiments on real-world dynamic videos demonstrate that our lightweight attention adaptation significantly outperforms previous state-of-the-art methods that are trained or finetuned on extensive dynamic datasets.

Latex Bibtex Citation:

@inproceedings{Chen2025Easi3r,

author = {Xingyu Chen and Yue Chen and Yuliang Xiu and Andreas Geiger and Anpei Chen},

title = {Easi3R: Estimating Disentangled Motion from DUSt3R Without Training},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2025}

}

LoftUp: Learning a Coordinate-Based Feature Upsampler for Vision Foundation Models

H. Huang, A. Chen, V. Havrylov, A. Geiger, D. Zhang

International Conference on Computer Vision (ICCV), 2025 Oral

Abstract: Vision foundation models (VFMs) such as DINOv2 and CLIP have achieved impressive results on various downstream tasks, but their limited feature resolution hampers performance in applications requiring pixel-level understanding. Feature upsampling offers a promising direction to address this challenge. In this work, we identify two critical factors for enhancing feature upsampling: the upsampler architecture and the training objective. For the upsampler architecture, we introduce a coordinate-based cross-attention transformer that integrates the high-resolution images with coordinates and low-resolution VFM features to generate sharp, high-quality features. For the training objective, we propose constructing high-resolution pseudo-groundtruth features by leveraging class-agnostic masks and self-distillation. Our approach effectively captures fine-grained details and adapts flexibly to various input and feature resolutions. Through experiments, we demonstrate that our approach significantly outperforms existing feature upsampling techniques across various downstream tasks.

Latex Bibtex Citation:

@inproceedings{Huang2025LoftUp,

author = {Haiwen Huang and Anpei Chen and Volodymyr Havrylov and Andreas Geiger and Dan Zhang},

title = {LoftUp: Learning a Coordinate-Based Feature Upsampler for Vision Foundation Models},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2025}

}

Neural Shell Texture Splatting: More Details and Fewer Primitives

X. Zhang, A. Chen, J. Xiong, P. Dai, Y. Shen, W. Xu

International Conference on Computer Vision (ICCV), 2025

Abstract: Gaussian splatting techniques have shown promising results in novel view synthesis, achieving high fidelity and efficiency. However, their high reconstruction quality comes at the cost of requiring a large number of primitives. We identify this issue as stemming from the entanglement of geometry and appearance in Gaussian Splatting. To address this, we introduce a neural shell texture, a global representation that encodes texture information around the surface. We use Gaussian primitives as both a geometric representation and texture field samplers, efficiently splatting texture features into image space. Our evaluation demonstrates that this disentanglement enables high parameter efficiency, fine texture detail reconstruction, and easy textured mesh extraction, all while using significantly fewer primitives.

Latex Bibtex Citation:

@inproceedings{Zhang2025NeST,

author = {Xin Zhang and Anpei Chen and Jincheng Xiong and Pinxuan Dai and Yujun Shen and Weiwei Xu},

title = {Neural Shell Texture Splatting: More Details and Fewer Primitives},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2025}

}

GaVS: 3D-Grounded Video Stabilization via Temporally-Consistent Local Reconstruction and Rendering

Z. You, S. Georgoulis, A. Chen, S. Tang, D. Dai

Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH), 2025

Abstract: Video stabilization is pivotal for video processing, as it removes unwanted shakiness while preserving the original user motion intent. Existing approaches, depending on the domain they operate in, suffer from several issues (e.g., geometric distortions, excessive cropping, poor generalization) that degrade the user experience. To address these issues, we introduce GaVS, a novel 3D-grounded approach that reformulates video stabilization as a temporally-consistent 'local reconstruction and rendering' paradigm. Given 3D camera poses, we augment a reconstruction model to predict Gaussian Splatting primitives, and finetune it at test-time, with multi-view dynamics-aware photometric supervision and cross-frame regularization, to produce temporally-consistent local reconstructions. The model is then used to render each stabilized frame. We utilize a scene extrapolation module to avoid frame cropping. Our method is evaluated on a repurposed dataset, instilled with 3D-grounded information, covering samples with diverse camera motions and scene dynamics. Quantitatively, our method is competitive with or superior to state-of-the-art 2D and 2.5D approaches in terms of conventional task metrics and new geometry consistency. Qualitatively, our method produces noticeably better results compared to alternatives, validated by the user study.

Latex Bibtex Citation:

@inproceedings{You2025GaVS,

author = {Zinuo You and Stamatios Georgoulis and Anpei Chen and Siyu Tang and Dengxin Dai},

title = {GaVS: 3D-Grounded Video Stabilization via Temporally-Consistent Local Reconstruction and Rendering},

booktitle = {Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH)},

year = {2025}

}

GenFusion: Closing the Loop between Reconstruction and Generation via Videos

S. Wu, C. Xu, B. Huang, A. Geiger, A. Chen

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

TL;DR: Gen Fusion = Video Generation + RGB-D Fusion.

Abstract: Recently, 3D reconstruction and generation have demonstrated impressive novel view synthesis results, achieving high fidelity and efficiency. However, a notable conditioning gap can be observed between these two fields, e.g., scalable 3D scene reconstruction often requires densely captured views, whereas 3D generation typically relies on a single or no input view, which significantly limits their applications. We found that the source of this phenomenon lies in the misalignment between 3D constraints and generative priors. To address this problem, we propose a reconstruction-driven video diffusion model that learns to condition video frames on artifact-prone RGB-D renderings. Moreover, we propose a cyclical fusion pipeline that iteratively adds restoration frames from the generative model to the training set, enabling progressive expansion and addressing the viewpoint saturation limitations seen in previous reconstruction and generation pipelines. Our evaluation, including view synthesis from sparse view and masked input, validates the effectiveness of our approach.

Latex Bibtex Citation:

@inproceedings{Wu2025GenFusion,

author = {Sibo Wu and Congrong Xu and Binbin Huang and Andreas Geiger and Anpei Chen},

title = {GenFusion: Closing the Loop between Reconstruction and Generation via Videos},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

Ref-GS: Directional Factorization for 2D Gaussian Splatting

Y. Zhang, A. Chen, Y. Wan, Z. Song, J. Yu, Y. Luo, W. Yang

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

Abstract: In this paper, we introduce Ref-GS, a novel approach for directional light factorization in 2D Gaussian splatting, which enables photorealistic view-dependent appearance rendering and precise geometry recovery. Ref-GS builds upon the deferred rendering of Gaussian splatting and applies directional encoding to the deferred-rendered surface, effectively reducing the ambiguity between orientation and viewing angle. Next, we introduce a spherical mip-grid to capture varying levels of surface roughness, enabling roughness-aware Gaussian shading. Additionally, we propose a simple yet efficient geometry-lighting factorization that connects geometry and lighting via the vector outer product, significantly reducing renderer overhead when integrating volumetric attributes. Our method achieves superior photorealistic rendering for a range of open-world scenes while also accurately recovering geometry.

Latex Bibtex Citation:

@inproceedings{Zhang2025Refgs,

author = {Youjia Zhang and Anpei Chen and Yumin Wan and Zikai Song and Junqing Yu and Yawei Luo and Wei Yang},

title = {Ref-GS: Directional Factorization for 2D Gaussian Splatting},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

Feat2GS: Probing Visual Foundation Models with Gaussian Splatting

Y. Chen, X. Chen, A. Chen, G. Pons-Moll, Y. Xiu

Conference on Computer Vision and Pattern Recognition (CVPR), 2025

TL;DR: A unified framework to probe “texture and geometry awareness” of visual foundation models.

Abstract: Given that visual foundation models (VFMs) are trained on extensive datasets but often limited to 2D images, a natural question arises: how well do they understand the 3D world? With the differences in architecture and training protocols (i.e., objectives, proxy tasks), a unified framework to fairly and comprehensively probe their 3D awareness is urgently needed. Existing works on 3D probing suggest single-view 2.5D estimation (e.g., depth and normal) or two-view sparse 2D correspondence (e.g., matching and tracking). Unfortunately, these tasks ignore texture awareness, and require 3D data as ground-truth, which limits the scale and diversity of their evaluation set. To address these issues, we introduce Feat2GS, which reads out 3D Gaussian attributes from VFM features extracted from unposed images. This allows us to probe 3D awareness for geometry and texture via novel view synthesis, without requiring 3D data. Additionally, the disentanglement of 3DGS parameters - geometry (x,α,Σ) and texture (c) - enables separate analysis of texture and geometry awareness. Under Feat2GS, we conduct extensive experiments to probe the 3D awareness of several VFMs, and investigate the ingredients that lead to a 3D-aware VFM. Building on these findings, we develop several variants that achieve state-of-the-art results across diverse datasets. This makes Feat2GS useful for probing VFMs, and as a simple-yet-effective baseline for novel-view synthesis.

Latex Bibtex Citation:

@inproceedings{Chen2025Probing,

author = {Yue Chen and Xingyu Chen and Anpei Chen and Gerard Pons-Moll and Yuliang Xiu},

title = {Feat2GS: Probing Visual Foundation Models with Gaussian Splatting},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}
